Reading course descriptions and degree plans has helped me understand more about the fields of artificial intelligence and data science. I think some universities have whipped up a program in one of these hot fields of study just to put something on the books. It’s quite unfair to students if this is just a collection of existing courses and not a deliberate, well structured path to learning.

I came across this page from Northeastern University that attempts to explain the “difference” between artificial intelligence and machine learning. (I use those quotation marks because machine learning is a subset of artificial intelligence.) The university has two different master’s degree programs for artificial intelligence; neither one has “machine learning” in its name — but read on!

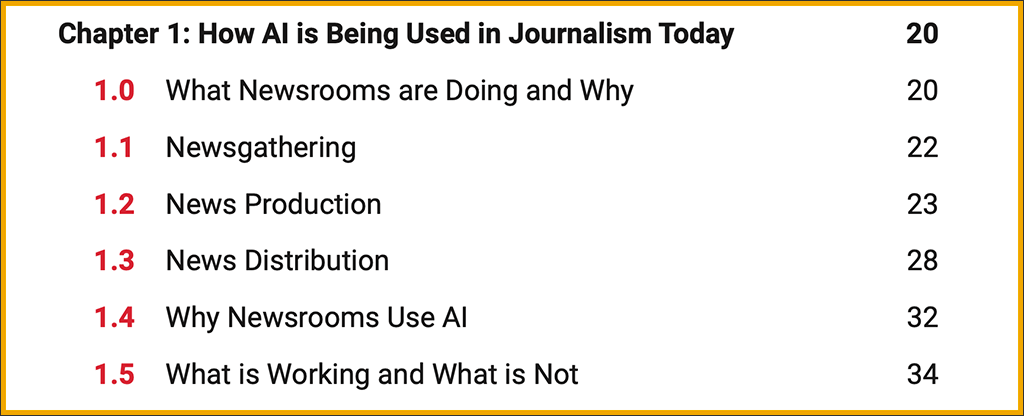

One of the two programs does not require a computer science undergraduate degree. It covers data science, robotics, and machine learning.

The other master’s program is for students who do have a background in computer science. It covers “robotic science and systems, natural language processing, machine learning, and special topics in artificial intelligence.”

I noticed that data science is in the program for those without a computer science background, while it’s not mentioned in the other program. This makes sense if we understand that data science and machine learning really go hand in hand nowadays. A data scientist likely will not develop any new machine learning systems, but she will almost certainly use machine learning to solve some problems. Training in statistics is necessary so that one can select the best algorithm for use in machining learning for solving a particular problem.

Graduates of the other program, with their prior experience in computer science, should be ready to break ground with new and original AI work. They are not going to analyze data for firms and organizations. Instead, they are going to develop new systems that handle data in new ways.

The distinction between these two degree programs highlights a point that perhaps a lot of people don’t yet understand: people (like journalists who have code experience) are training models — using machine learning systems through writing code to control them — and yet they are not people who create new machine learning systems.

Separately there are developers who create new AI software systems, and engineers who create new AI hardware systems. In other words, there are many different roles in the AI field.

Finally, there are so-called AI systems sold to banks and insurance companies, and many other types of firms, for which the people using the system do not write code at all. Using them requires data to be entered, and results are generated (such as whose insurance rates will go up next year). The workers who use these systems don’t write code any more than an accountant writes code. Moreover, they can’t explain how the system works — they need only know what goes in and what comes out.

AI in Media and Society by Mindy McAdams is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Include the author’s name (Mindy McAdams) and a link to the original post in any reuse of this content.

.